Overview

In this lesson, you'll learn how to represent words and documents with vectors of features.

Outcomes

After completing this lesson, you'll be able to ...

- represent words as feature vectors

- represent documents as feature vectors

- define simple feature functions

- describe feature vocabularies

Feature vectors

Imagine you are presented with the following table:

| | round? | long? | vegetable? | green? |
|---|---|---|---|---|
| ??? | False | True | True | True |
| ??? | True | False | True | True |
| ??? | True | False | False | True |

Can you guess what the rows might correspond to based on the column values?

🤔 💭

| | round? | long? | vegetable? | green? |
|---|---|---|---|---|
| broccoli | False | True | True | True |
| cabbage | True | False | True | True |
| watermelon | True | False | False | True |

Here is one possible assignment. You may have thought of other things that met the criteria given for each row.

This is an example of how objects can be represented as feature vectors, that is, ordered sequences of feature values.

Each row in our table represents a particular object. Each column represents a particular feature (i.e., a measurable property). The value in each cell indicates the degree to which the object associated with that row exhibits the property of the column. In this case, it's all or nothing (True or False).

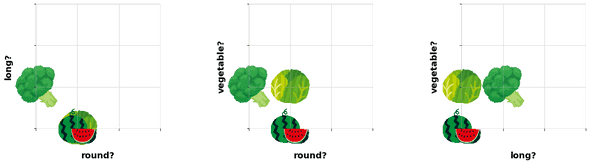

The number of columns indicates the number of dimensions in our feature representation. Using the values of these features, we can plot up to three or four dimensions, but after that it gets tricky. One option is to visualize pairs or triples of features at a time.

It's very important that we always order our features in the same way. For instance, if round? is the first feature in our "broccoli" feature vector, it has to be the first feature in the feature vector of every other object we're representing (i.e., a feature's position or index should always correspond to its column in our table).
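One way to keep the ordering consistent is to fix the feature order once and build every vector from it. The following is only a minimal sketch: the names `FEATURES`, `OBJECTS`, and `to_vector` are made up for illustration, and the values are copied from the table above.

```python
# Fixed feature order: index i always refers to the same feature.
FEATURES = ["round?", "long?", "vegetable?", "green?"]

# Feature values from the table above, keyed by feature name.
OBJECTS = {
    "broccoli":   {"round?": False, "long?": True,  "vegetable?": True,  "green?": True},
    "cabbage":    {"round?": True,  "long?": False, "vegetable?": True,  "green?": True},
    "watermelon": {"round?": True,  "long?": False, "vegetable?": False, "green?": True},
}

def to_vector(values):
    """Return the feature values as a list ordered according to FEATURES."""
    return [values[name] for name in FEATURES]

vectors = {name: to_vector(values) for name, values in OBJECTS.items()}
print(vectors["broccoli"])  # [False, True, True, True]
```

Because every vector is built from the same `FEATURES` list, position 0 always means round?, position 1 always means long?, and so on.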

We'll come to understand why this ordering is so important when we look at ways of comparing vectors in Unit 6.

Choosing the right features

When representing an object (i.e., a datapoint) with features, there are a couple of properties we want...

1. Similar representations for similar objects

We want similar things to end up with similar representations and dissimilar things to end up with dissimilar representations.

This principle can help us devise an appropriate set of features for our data.

Typically, we'll select a representation to solve a particular problem. For example, perhaps we want to classify emails as spam or not spam. We might devise a representation that looks something like the following:

| | contains URL? | from a prince? | contains 'click here'? | contains 'transfer funds'? |
|---|---|---|---|---|
| email1 | True | False | False | False |
| email2 | False | True | False | True |
| email3 | False | False | False | False |
| email4 | True | False | True | False |

If the email representations are labeled as SPAM or NOT SPAM, one might end up devising a decision process that maps the feature values of an email to one of those labels.
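As a rough illustration, a decision process of this kind could be written as a few hand-written rules. The rules below are invented for this sketch (the table above carries no labels), and `looks_like_spam` is a hypothetical name.

```python
def looks_like_spam(email):
    """Toy rule-based decision process over the four binary features.

    The rules are invented for illustration, not learned from labeled data.
    """
    if email["from a prince?"] and email["contains 'transfer funds'?"]:
        return True
    if email["contains URL?"] and email["contains 'click here'?"]:
        return True
    return False

# email2 from the table above.
email2 = {
    "contains URL?": False,
    "from a prince?": True,
    "contains 'click here'?": False,
    "contains 'transfer funds'?": True,
}
# email3 from the table above: every feature is False.
email3 = dict.fromkeys(email2, False)

print(looks_like_spam(email2))  # True
print(looks_like_spam(email3))  # False
```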

2. Discriminative features

No feature should have the same value for all objects.

Returning to our first example, notice that the rightmost column is true for all objects:

| | green? |
|---|---|
| broccoli | True |
| cabbage | True |
| watermelon | True |

This doesn't help to distinguish these objects at all. Unless we expect to compare against at least some non-green objects, this feature isn't useful.
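A check like this is easy to automate. The sketch below flags any feature that takes a single value across all objects; `constant_features` is a hypothetical helper written for this example, and the vectors repeat the first table.

```python
FEATURES = ["round?", "long?", "vegetable?", "green?"]

VECTORS = {
    "broccoli":   [False, True,  True,  True],
    "cabbage":    [True,  False, True,  True],
    "watermelon": [True,  False, False, True],
}

def constant_features(features, vectors):
    """Return the names of features that take a single value across all vectors."""
    constant = []
    for i, name in enumerate(features):
        # Collect the distinct values seen at position i.
        values = {vec[i] for vec in vectors.values()}
        if len(values) == 1:
            constant.append(name)
    return constant

print(constant_features(FEATURES, VECTORS))  # ['green?']
```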

Our features together represent an observable world: if two objects have the same representation, we cannot distinguish between them (we're describing them in the same terms).

Let's look at the remaining features:

| | round? | long? | vegetable? |
|---|---|---|---|
| broccoli | False | True | True |
| cabbage | True | False | True |
| watermelon | True | False | False |

These features are sufficient to give us a unique representation for each object:

    broccoli = [False, True, True]
    cabbage = [True, False, True]
    watermelon = [True, False, False]

Typically, we'll also want some automated way of estimating the values of these features. Perhaps that's looking something up in a database or knowledge base (ex. Wikipedia). We'll revisit this in our discussion of feature functions and later in the context of text classification and supervised machine learning.

Feature values

Binary features

Sometimes we may only care whether or not some property holds for an object. Our features can be binary:

| | round? | long? | vegetable? |
|---|---|---|---|
| broccoli | False | True | True |
| cabbage | True | False | True |
| watermelon | True | False | False |

We can represent True and False with 1 and 0:

| | round? | long? | vegetable? |
|---|---|---|---|
| broccoli | 0 | 1 | 1 |
| cabbage | 1 | 0 | 1 |
| watermelon | 1 | 0 | 0 |
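In Python, this conversion is a one-liner. The snippet is a small sketch using the broccoli row; the variable names are chosen just for this example.

```python
broccoli = [False, True, True]

# bool is a subclass of int in Python, so int(True) == 1 and int(False) == 0.
broccoli_as_ints = [int(value) for value in broccoli]
print(broccoli_as_ints)  # [0, 1, 1]
```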

Continuous-valued features

Some features are better represented using continuous values. These can use whatever range you'd like:

| | sentiment_score |
|---|---|
| elated | 3 |
| worried | -1.6 |
| depressed | -3 |

In later lessons, we'll revisit mixed scale representations and think about when and why we might want to standardize.

Feature functions and feature extraction

While we can dream up all sorts of features, there are some practical considerations.

Generally speaking, our object representations or feature vectors will be used as input to some task (ex. email classification). If we're trying to automate that task, we certainly don't want to have to ask humans to manually assign feature values whenever we need to classify a new object (ex. email). If we're going to do that, we might as well ask them to classify the object!

Instead, we really need some automated way of estimating the values of our features.

A feature function is a function used to estimate the value of some feature. A feature function might look something up in a database or knowledge base (ex. Wikipedia) or perhaps check for the presence of some prefix or suffix:

    def ends_in_er(tok):
        """Return 1 if the token ends in "er", otherwise 0."""
        return 1 if tok.endswith("er") else 0

    ends_in_er("simpler")  # returns 1

Depending on the task we have in mind, we might have a bunch of small feature functions to help construct our complete representation, or rely on just one that generates a bunch of features of the same basic type (ex. all character sequences of length 3). We call the process of converting some object into a vector of feature values feature extraction.
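To make feature extraction concrete, here is a minimal sketch that applies several small feature functions in a fixed order. `starts_with_pre`, `is_long`, `FEATURE_FUNCTIONS`, and `extract` are hypothetical names chosen for this example, and the length threshold is arbitrary; `ends_in_er` is repeated so the block runs on its own.

```python
def ends_in_er(tok):
    return 1 if tok.endswith("er") else 0

def starts_with_pre(tok):
    return 1 if tok.startswith("pre") else 0

def is_long(tok):
    # Arbitrary threshold chosen for illustration.
    return 1 if len(tok) > 6 else 0

# The order of this list fixes the order of the features in every vector.
FEATURE_FUNCTIONS = [ends_in_er, starts_with_pre, is_long]

def extract(tok):
    """Convert a token into a vector with one value per feature function."""
    return [f(tok) for f in FEATURE_FUNCTIONS]

print(extract("simpler"))  # [1, 0, 1]
print(extract("preheat"))  # [0, 1, 1]
```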

Feature vocabulary

The set of features we use to represent a collection of objects is called a feature vocabulary.

Thinking back to our first example, the size of a feature vocabulary equals the number of columns in our table of features.

In natural language processing, you'll learn that it is not uncommon to have a vocabulary comprised of hundreds or thousands of features.
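As a small sketch of how a vocabulary can be collected from data, the example below gathers every feature produced by one feature function and then maps each word to a binary vector over that vocabulary. The word list, `suffix_features`, and `to_vector` are assumptions made up for this illustration.

```python
def suffix_features(tok, n=2):
    """Return a list holding one named feature: the last n characters of tok."""
    return [f"suffix={tok[-n:]}"]

words = ["walked", "talked", "walker", "simpler"]

# The vocabulary is the sorted set of every feature observed in the data.
vocabulary = sorted({feat for w in words for feat in suffix_features(w)})
print(vocabulary)       # ['suffix=ed', 'suffix=er']
print(len(vocabulary))  # 2

def to_vector(tok):
    """Binary vector with one position per vocabulary entry."""
    present = set(suffix_features(tok))
    return [1 if feat in present else 0 for feat in vocabulary]

print(to_vector("walker"))  # [0, 1]
```

Sorting the vocabulary gives every feature a stable index, which keeps the column order identical across all vectors.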

Features for words and documents

We looked at some examples of features, but what kinds of features are commonly used to represent words and documents (ex. sentences, paragraphs, tweets, emails, articles)? Here are a few...

- character sequences
  - useful for representing individual words or tokens
  - ex. starts with "pre", ends with "ed", etc.
- token/word sequences
  - useful for representing documents
  - ex. ("click", "here"), ("is", "a"), etc.
- word shapes

These can either take binary (1 or 0) or continuous (ex. frequency counts) values.
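The three kinds of features listed above could be sketched as follows. All function names are hypothetical, and the word-shape mapping (uppercase to `X`, lowercase to `x`, digit to `d`) is one common convention assumed here rather than something this lesson defines.

```python
from collections import Counter

def char_features(tok):
    """Character-sequence features for one token: 3-char prefix and 2-char suffix."""
    return [f"prefix={tok[:3]}", f"suffix={tok[-2:]}"]

def token_pair_counts(tokens):
    """Frequency counts of adjacent token pairs in a document (a list of tokens)."""
    return Counter(zip(tokens, tokens[1:]))

def word_shape(tok):
    """Map uppercase to 'X', lowercase to 'x', digits to 'd'; keep other characters."""
    shape = []
    for ch in tok:
        if ch.isupper():
            shape.append("X")
        elif ch.islower():
            shape.append("x")
        elif ch.isdigit():
            shape.append("d")
        else:
            shape.append(ch)
    return "".join(shape)

print(char_features("preheated"))  # ['prefix=pre', 'suffix=ed']

doc = "click here to see what is here click here".split()
print(token_pair_counts(doc)[("click", "here")])  # 2

print(word_shape("Tucson"))   # Xxxxxx
print(word_shape("AZ-2024"))  # XX-dddd
```

A count of 2 for ("click", "here") is a continuous-style value; replacing it with 1 whenever the count is above zero would give the binary version of the same feature.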

We'll learn more about character and token/word sequences in our lesson on n-grams. As we'll see, such features work surprisingly well for many classification tasks.

Next steps

You now understand the basics of representing words and documents as vectors (defining feature functions, creating vocabularies, etc.). Before learning about n-grams, let's practice ...

Practice

Word shapes for what?

- Name a classification task where information on word shape could be useful.

- What sorts of words can be easily distinguished by word shape?

Other features

- Can you think of other features for representing words or documents that could be useful?

- How would you estimate these features?

- Write a feature function for estimating/measuring the value of one such feature. The function should take a word or document (string or sequence of tokens).

Feature extraction

- Consider the following sequence of words:

      ["fight", "fought", "sang", "sung", "hit", "hang", "eat", "ate"]

- Write a feature function that returns all vowels or vowel sequences for a word.
  - ex. vowels("fight") returns ["vowel=i"]
- Write a feature function that returns the first character of each word.
  - ex. first_char("fight") returns ["firstchar=f"]

- Calculate your set of features across all words and sort them alphabetically to create a feature vocabulary.
  - What is the size of this vocabulary?

- Using a table or spreadsheet, represent the feature vector of each word where the columns of the table are features and the rows of the table are words.

- Which words appear to have similar representations? Which words seem dissimilar?